AI Computer (2024) (automatically translated)

The world of artificial intelligence (AI), and especially generative AI, has boomed in recent years since the release of ChatGPT in late 2022. There is a lot of hype about this technology and a lot of talk about it being the future. My curiosity has led me to want to learn how this technology works and how to apply it to my day-to-day life. And if there is one thing I have learned as an engineering student, it is that the best way to learn how something works is to build it yourself.

That is why I decided to build, from individual parts, a computer powerful enough to run and experiment with some of these AI models locally. My decision to build this desktop from parts was partly influenced by this video.

Until now I had an HP laptop, which served me well throughout my degree. However, a laptop will always be less powerful than a desktop with the same components because of thermal limitations, so I decided to sell my laptop to build this computer. The obvious disadvantage is portability, but as mentioned in the video there is a workaround: connecting to the desktop remotely over SSH. I have installed a client on my tablet to make this connection, so I can use my desktop computer wherever I take my tablet.

Components:

Nvidia GeForce RTX 3060 LHR

One thing that stuck with me while researching which components to choose is that the most important component for running AI models is the graphics card. This is because inference algorithms are highly parallelizable. Inference is the processing these models perform to generate an output from an input, be it text, video, audio or any other type of information. This processing involves a large number of matrix operations that can be carried out in parallel. GPUs (Graphics Processing Units) are designed, as their name suggests, to generate graphics, and computing those graphics also consists of a large number of simple operations that can be performed simultaneously. Because of this similarity, GPUs are much better suited to running these models than CPUs, which are designed for general-purpose workloads.
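To make the "highly parallelizable" point concrete, here is a minimal sketch of a matrix-vector product, the core operation of a neural-network layer. Each output element is the dot product of one weight row with the input, and no row depends on another, so the rows can be handed to independent workers. The threads here only illustrate that independence (in CPython they will not actually speed up pure-Python arithmetic); a GPU applies the same idea with thousands of cores. The function names are illustrative, not taken from any library.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """One output element: the dot product of a weight row with the input."""
    return sum(w * x for w, x in zip(row, vector))


def matvec_parallel(matrix, vector, workers=4):
    """Compute matrix @ vector, handing each row to a separate worker.

    No row depends on any other row's result, so the rows can be computed
    in any order; pool.map still returns the results in row order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))


weights = [[1, 2, 3],
           [4, 5, 6],
           [7, 8, 9]]
inputs = [1, 0, -1]
print(matvec_parallel(weights, inputs))  # [-2, -2, -2]
```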

Computational capacity, measured in TFLOPS, matters because more capacity means faster inference, but the most important parameter is VRAM. VRAM is the graphics card's memory, and it is the limiting factor when running a model: before it can run, the model has to be loaded into VRAM. The more VRAM, the larger the models we can run, and this matters because larger models tend to be smarter and perform better.
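A rough way to check whether a model fits in VRAM is to multiply its parameter count by the bytes used per parameter. The sketch below assumes approximate sizes of 2 bytes per weight for 16-bit models and about 1 and 0.5 bytes for 8-bit and 4-bit quantized models (real quantized formats use slightly more), plus a hypothetical fixed overhead of 1.5 GB for the context cache and runtime. These are assumptions for illustration, not exact figures.

```python
# Approximate bytes per parameter (assumed; real quantized formats vary).
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point weights
    "q8": 1.0,    # roughly 8-bit quantization
    "q4": 0.5,    # roughly 4-bit quantization
}


def weights_gb(params_billions, precision):
    """Approximate memory needed just to hold the weights, in GB (10**9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits_in_vram(params_billions, precision, vram_gb=12.0, overhead_gb=1.5):
    """Rough check: weights plus an assumed fixed overhead must fit in VRAM."""
    return weights_gb(params_billions, precision) + overhead_gb <= vram_gb


# An 8-billion-parameter model on a 12 GB card
for precision in ("fp16", "q8", "q4"):
    print(precision, weights_gb(8, precision), fits_in_vram(8, precision))
# fp16 16.0 False
# q8 8.0 True
# q4 4.0 True
```

This is why quantized versions of models are so popular on cards like the RTX 3060: the same model that needs about 16 GB at 16-bit precision fits comfortably at 4 or 8 bits.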

Another factor to take into account is the manufacturer. Nvidia has been a pioneer in adapting its products for AI development. Its drivers and toolkit give programs access to GPU resources through CUDA, Nvidia's parallel computing platform and programming model, and most AI software is heavily optimized for it. This is a big part of why the AI boom has made Nvidia, at times, the most valuable company in the world.
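As a quick way to confirm that CUDA can actually see the card, the sketch below assumes PyTorch built with CUDA support is installed (PyTorch is not mentioned in the post; LM Studio and Ollama do not need it). It uses the real `torch.cuda.is_available()` and `torch.cuda.get_device_properties()` calls and returns `None` if PyTorch or a CUDA device is missing.

```python
def cuda_summary():
    """Return basic info about the first CUDA GPU, or None if unavailable.

    Assumes PyTorch with CUDA support; if PyTorch is not installed, the
    function simply reports that no CUDA device was found.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    return {
        "name": props.name,
        "vram_gb": round(props.total_memory / 1024**3, 1),
        "cuda_runtime": torch.version.cuda,
    }


info = cuda_summary()
print(info if info else "No CUDA device visible to PyTorch")
```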

The graphics card market is unpredictable, and demand has increased a lot in the last few years, so cards are quite expensive. I was lucky to find a second-hand Nvidia RTX 3060 for 200€. It is a mid-to-low-range card from the previous generation. What makes this card special is that, although it is not particularly powerful, it has 12GB of VRAM, as much as some high-end cards, which is very good for this application.

CPU AMD Ryzen 7 8700G

The Central Processing Unit (CPU) I chose is a mid-to-high-end AMD Ryzen 7 from the previous generation, for 280€. In addition to being a very capable general-purpose processor with 8 cores and 16 threads, this CPU comes with powerful integrated graphics. These can take load off the graphics card, for example by rendering the display. In addition, if a model is too large for the VRAM, it can be split: part of it is stored in main memory (RAM) and run by the CPU and its integrated graphics, while the rest runs on the graphics card. This is not always desirable, though, since the CPU becomes a bottleneck and slows inference down considerably.
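Splitting a model between GPU and CPU is usually done layer by layer; llama.cpp, for example, exposes this as the `--n-gpu-layers` (`-ngl`) option. The sketch below estimates how many layers fit on the graphics card, assuming all layers are the same size and keeping a hypothetical 2 GB of VRAM in reserve for the context cache and runtime. The model size and layer count in the example are made up for illustration.

```python
import math


def gpu_layers(model_gb, n_layers, vram_gb=12.0, reserved_gb=2.0):
    """How many layers fit on the GPU; the remaining layers run on the CPU.

    Assumes every layer is the same size (model_gb / n_layers) and that
    reserved_gb of VRAM stays free for the context cache and runtime.
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserved_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# Hypothetical 24 GB model with 48 layers on a 12 GB card
on_gpu = gpu_layers(model_gb=24.0, n_layers=48)
print(f"{on_gpu} layers on the GPU, {48 - on_gpu} on the CPU")
# 20 layers on the GPU, 28 on the CPU
```

Every layer left on the CPU has to wait for the slower processor and system RAM, which is why inference slows down so much once a model no longer fits entirely in VRAM.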

MSI PRO B650M-A WIFI

The motherboard I chose is the mid-range MSI B650, designed for AMD processors with the AM5 socket, for 142€. Its form factor is micro-ATX. Desktop components follow a standard called ATX, created in 1995. Micro-ATX motherboards are somewhat more compact and usually have fewer expansion slots and connectors than ATX boards, but are otherwise equivalent. They are also cheaper. The important things about this board are that it has two PCIe slots, so two graphics cards can be connected if necessary, and that it supports fast memory (5200 MHz).

Cooler Master

For the CPU I chose a cheap 23€ Cooler Master heatsink with 8 copper heat pipes. While the CPU is quite powerful, it is also very efficient. The amount of heat a CPU is designed to produce is specified in watts by a parameter called TDP (Thermal Design Power), which gives a good idea of its power consumption. The TDP of this CPU is 65W, while some CPUs in the same range are rated at around 200W. That is why I decided not to spend money on a liquid cooler or a big, bulky heatsink.

CRUCIAL 2x16GB

The system is equipped with 32GB of latest-generation RAM (DDR5). If part of a model does not fit in VRAM, it can be loaded into these 32GB of main memory. The RAM cost me 119€.

SSD 2TB

For storage, the system uses a 2TB SATA SSD that I had bought for my laptop. This gives me plenty of space for the models I download. SATA SSDs are not as fast as NVMe SSDs, but they are much faster than HDDs (hard disk drives). If I remember correctly, it cost me about 100€.
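Drive speed mostly shows up when loading a model from disk into memory. The sketch below estimates a best-case load time as file size divided by sequential read speed. The read speeds are assumed typical ballpark figures (real drives vary widely), and the estimate ignores operating-system caching, which can make repeat loads much faster.

```python
# Assumed typical sequential-read speeds in MB/s (ballpark values only).
READ_SPEED_MB_S = {
    "HDD": 150,
    "SATA SSD": 550,
    "NVMe PCIe 4.0 SSD": 7000,
}


def load_seconds(model_gb, drive):
    """Best-case time to read a model file of model_gb gigabytes from disk."""
    return model_gb * 1000 / READ_SPEED_MB_S[drive]


for drive in READ_SPEED_MB_S:
    print(f"{drive}: {load_seconds(8.0, drive):.1f} s for an 8 GB model")
# HDD: 53.3 s for an 8 GB model
# SATA SSD: 14.5 s for an 8 GB model
# NVMe PCIe 4.0 SSD: 1.1 s for an 8 GB model
```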

PSU Gigabyte

With all the other components chosen, I used this calculator to estimate how much power the system needs and chose the power supply accordingly. I bought a 750W power supply, which is much more than I need, but it will let me make upgrades in the future, such as adding another graphics card, more RAM or another SSD.
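The idea behind a PSU calculator can be sketched as adding up the expected draw of each component and applying a safety margin. Only the RTX 3060's 170 W board power is a published rating; the other wattages (including about 88 W for the CPU under boost) and the 1.5x headroom factor are assumed ballpark values, not measurements of this build.

```python
# Per-component power estimates in watts. The RTX 3060 value is its rated
# board power; the rest are assumed ballpark figures for illustration.
COMPONENT_WATTS = {
    "RTX 3060 (board power)": 170,
    "Ryzen 7 8700G (assumed draw under boost)": 88,
    "Motherboard": 50,
    "2x DDR5 modules": 10,
    "SATA SSD": 5,
    "Fans and USB devices": 20,
}


def recommended_psu(watts, headroom=1.5):
    """Return (estimated total draw, total multiplied by a safety headroom)."""
    total = sum(watts.values())
    return total, total * headroom


total, suggested = recommended_psu(COMPONENT_WATTS)
print(f"Estimated draw: {total} W, suggested PSU: at least {suggested} W")
# Estimated draw: 343 W, suggested PSU: at least 514.5 W
```

Under these assumptions a 750 W unit leaves a comfortable margin beyond the suggested figure.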

It is not a good idea to cut costs too much here, because a bad power supply can damage the rest of the components if it fails. I bought a Gigabyte PSU for 99€.

At this point the computer worked perfectly, but having all the components exposed was neither the most aesthetic nor the safest option, so I finally decided to buy a proper case for 59€.

The total cost of the computer, including the SSD, is approximately 1020€. I think that is quite cheap for the capabilities it offers. For comparison, I found this prebuilt computer with similar specifications that costs 1700€.
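The total can be checked by adding up the prices listed in the component sections (the SSD price is the approximate figure I remember):

```python
# Prices in euros, as listed in the component sections of this post.
costs_eur = {
    "GPU: RTX 3060 12 GB (second hand)": 200,
    "CPU: Ryzen 7 8700G": 280,
    "Motherboard: MSI PRO B650M-A WIFI": 142,
    "CPU cooler: Cooler Master": 23,
    "RAM: Crucial 2x16 GB DDR5": 119,
    "SSD: 2 TB SATA (approximate)": 100,
    "PSU: Gigabyte 750 W": 99,
    "Case": 59,
}

total = sum(costs_eur.values())
print(f"Total: {total} €")                         # Total: 1022 €
print(f"Difference vs. 1700 € prebuilt: {1700 - total} €")  # 678 €
```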

Case

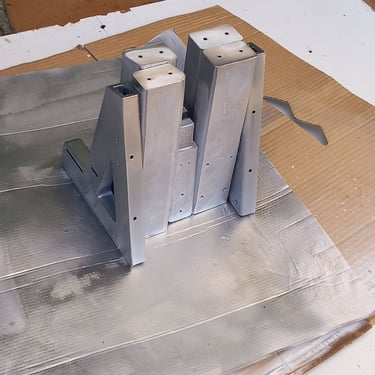

Trying to cut costs, I had the not-so-good idea of 3D printing my own computer case. Seeing that it would take a lot of time and many print attempts, I decided to print only a frame, or chassis, to hold the components, something like a test bench. I downloaded this model and modified it to fit the micro-ATX form factor of my motherboard. I used my 3D printer, PLA filament and a can of metallic paint I had. This is the result.

What have I used it for so far?

These are some of the artificial intelligence applications I have used it for:

LM Studio: a program that lets you download LLMs (Large Language Models) and run them locally very easily through a graphical interface.

Ollama: a command-line application that also lets you download and run LLMs locally. Both LM Studio and Ollama are built on the llama.cpp library (written in C/C++).
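Besides the interactive `ollama run llama3.1` command, Ollama serves a local HTTP API, by default at `http://localhost:11434`, with a `/api/generate` endpoint. The sketch below uses only the Python standard library and assumes the server is running and the `llama3.1` model has already been pulled; the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt, model="llama3.1"):
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3.1"):
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With `ollama serve` running, calling `generate("Explain VRAM in one sentence.")` returns the model's answer as a string. Setting `"stream": False` makes Ollama send a single JSON object instead of a stream of partial responses, which keeps the client simple.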

Whisper: a model released by OpenAI that can transcribe speech in audio to text. I used it to generate subtitles for an anime I was watching.
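Generating subtitles boils down to turning Whisper's timestamped segments into an SRT file. The sketch below assumes the `openai-whisper` Python package, whose `transcribe()` result contains a `segments` list with `start`, `end` and `text` for each segment; the `small` model size is an arbitrary choice. The package's command-line tool can also write SRT directly with `--output_format srt`.

```python
def srt_timestamp(seconds):
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Turn Whisper-style segments (dicts with start, end, text) into SRT text."""
    blocks = []
    for i, seg in enumerate(segments, start=1):
        blocks.append(
            f"{i}\n{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(blocks)  # a blank line separates consecutive subtitles


def transcribe_to_srt(audio_path, srt_path, model_name="small"):
    """Transcribe with openai-whisper and write the result as an .srt file."""
    import whisper  # pip install openai-whisper (imported only when needed)

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path)
    with open(srt_path, "w", encoding="utf-8") as f:
        f.write(segments_to_srt(result["segments"]))
```

For example, `transcribe_to_srt("episode01.mp3", "episode01.srt")` (hypothetical file names) produces a subtitle file that most video players can load next to the video.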

Image generation: I used a model called FLUX.1 [dev], released by Black Forest Labs, to generate images from text. Grok, the AI of X (Twitter), has used a model from the same FLUX.1 family to generate images. I used ComfyUI, an interface that gives precise control over image generation models. Some examples:

The AI application I use most on a daily basis is Ollama with Llama 3.1, the latest model released by Meta at the time of writing. It is very convenient to have it quickly accessible on the command line, and it is also very fast, since it runs locally with no network latency and has a practically dedicated graphics card.

Of course, this computer is not built only for this purpose. Having a powerful computer will also come in handy for heavy engineering applications and many other things.

Future upgrades

Here are some improvements I may make in the future:

Another graphics card: adding a second graphics card can increase the speed and capacity of the models I can run. Simplifying a bit, you can split an AI model into parts, store each part on a different graphics card and run them one after another.
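The "split and run one after another" idea can be sketched as assigning contiguous blocks of layers to each card in proportion to its VRAM; llama.cpp, for instance, has a `--tensor-split` option for setting such proportions. The second card's 8 GB in the example is purely hypothetical, and VRAM sizes are given as whole gigabytes so the arithmetic stays in integers.

```python
def split_layers(n_layers, vram_per_gpu_gb):
    """Assign contiguous blocks of layers to GPUs in proportion to their VRAM.

    vram_per_gpu_gb must contain integers (whole GB). Layers run in order,
    so GPU 0 processes its block and passes the activations to GPU 1, and
    so on (a simple pipeline split).
    """
    total_vram = sum(vram_per_gpu_gb)
    counts = [n_layers * v // total_vram for v in vram_per_gpu_gb]
    counts[-1] += n_layers - sum(counts)  # give any rounding remainder to the last GPU
    ranges, start = [], 0
    for count in counts:
        ranges.append(range(start, start + count))
        start += count
    return ranges


# The 12 GB RTX 3060 plus a hypothetical 8 GB second card, 32-layer model
print([(r.start, r.stop) for r in split_layers(32, [12, 8])])
# [(0, 19), (19, 32)]
```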

Add two more 16 GB RAM modules.

Add an NVMe SSD to speed up program startup and the loading of AI models into memory.